Role: UX Design / Lead Unity Developer

I originally joined the team to help think through UX design and digital dramaturgy, and was later promoted to lead developer to build a theater-cue-based code architecture suited to the project’s linear narrative structure.

Narrative Mapping

When I came on board, creators Dan and Quill had already begun work on a theater-style script for the experience. Using two parallel columns representing the two players’ phones, the script included text for the audio to be recorded by voice actors as well as “stage directions” describing the user interface and possible interactions with it.

I began mapping the narrative visually in Miro as a way to start translating the script into a structure that could be built in Unity.

Playtesting

Before the app was built, I also helped the team design early playtests. We used paper prototypes, bodystormed on site (walking through the experience path while listening to an audio recording of a read-through), and instituted post-playtest questions from Schell Games.

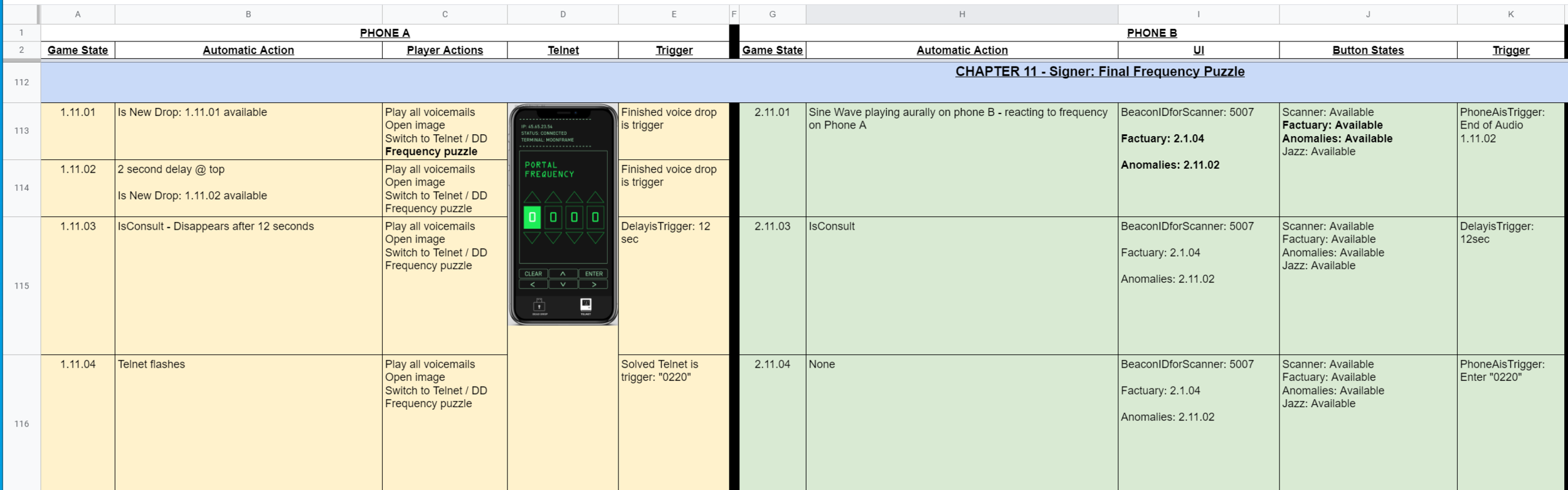

Theater script to C# script

I worked with the stage manager and producer to translate the theater script into a detailed design document for the app. We started with the idea of the “robot stage manager.” Traditionally, a human stage manager watches a performance while following a theater script annotated with technical cues, which lets them execute lighting shifts, sound effects, etc. at the right moments relative to the actors' speech and movements. Our robot stage manager would need to trigger UI shifts and new audio clips in the app based on the users’ clicks, Bluetooth beacon proximity, and the progression of the narrative. From this concept, we developed the robot’s cueing script, from which I was then able to build the Unity project. This design document breaks the experience into cues that each include:

automatic actions, which take place at the start of the cue (such as a button flash or new voice drop banner)

player actions that are possible during the cue (such as pressing a button or playing a voice drop message)

triggers that cause the app to move into the next cue (such as an audio clip finishing, a Bluetooth beacon’s proximity threshold being reached, or a puzzle being solved)
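
The three-part cue structure above can be sketched in plain C#. This is a minimal illustration rather than the project's actual code: the names `Cue`, `TriggerKind`, and `RobotStageManager` are hypothetical, and the real cues were Unity assets, not plain classes.

```csharp
using System;
using System.Collections.Generic;

// All names here are hypothetical, chosen only to illustrate the cue structure.
public enum TriggerKind { AudioFinished, BeaconInRange, PuzzleSolved }

public sealed class Cue
{
    public string Name { get; }
    // Automatic actions run once, when the cue starts.
    public List<Action> AutomaticActions { get; } = new List<Action>();
    // Player actions are only available while this cue is active.
    public Dictionary<string, Action> PlayerActions { get; } = new Dictionary<string, Action>();
    // The trigger that moves the app into the next cue.
    public TriggerKind AdvanceOn { get; }

    public Cue(string name, TriggerKind advanceOn)
    {
        Name = name;
        AdvanceOn = advanceOn;
    }
}

public sealed class RobotStageManager
{
    private readonly IReadOnlyList<Cue> cues;
    private int index = -1;

    public RobotStageManager(IReadOnlyList<Cue> cues) { this.cues = cues; }

    // Null before Start() and after the last cue has finished.
    public Cue Current => index >= 0 && index < cues.Count ? cues[index] : null;

    public void Start() => Enter(0);

    // Runs a player action if the current cue allows it.
    public bool TryPlayerAction(string id)
    {
        if (Current != null && Current.PlayerActions.TryGetValue(id, out var action))
        {
            action();
            return true;
        }
        return false;
    }

    // Called when something happens in the world; advances only on the expected trigger.
    public void Notify(TriggerKind kind)
    {
        if (Current != null && Current.AdvanceOn == kind) Enter(index + 1);
    }

    private void Enter(int next)
    {
        index = next;
        if (Current == null) return; // past the last cue
        foreach (var action in Current.AutomaticActions) action();
    }
}

public static class Demo
{
    public static void Main()
    {
        var intro = new Cue("Intro", TriggerKind.AudioFinished);
        intro.AutomaticActions.Add(() => Console.WriteLine("Flash the play button"));
        intro.PlayerActions["playVoiceDrop"] = () => Console.WriteLine("Play voice drop");

        var walk = new Cue("Walk", TriggerKind.BeaconInRange);
        walk.AutomaticActions.Add(() => Console.WriteLine("Show new voice drop banner"));

        var stageManager = new RobotStageManager(new List<Cue> { intro, walk });
        stageManager.Start();                            // runs Intro's automatic actions
        stageManager.TryPlayerAction("playVoiceDrop");   // allowed during Intro
        stageManager.Notify(TriggerKind.BeaconInRange);  // ignored: Intro waits for audio
        stageManager.Notify(TriggerKind.AudioFinished);  // advances to Walk
    }
}
```

Keeping the trigger on the cue itself means the stage manager never needs to know why the narrative advances, only that the expected trigger arrived.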

Architecture

I built a custom cueing system inside Unity using scriptable objects and events, based on the approach from Ryan Hipple’s 2017 Unite talk.

Drag-and-drop inspector controls made it easier for theater practitioners to collaborate on building the app. With each cue represented by a scriptable object, the stage management team was able to program cues much as they would in theatrical show control software.
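
As a rough sketch of how scriptable-object events can support this kind of inspector workflow, the following follows the general event-asset and listener pattern. The class names (`CueEvent`, `CueEventListener`, `CueAsset`) and menu paths are assumptions for illustration, not the project's actual types. In a Unity project, each class would live in its own file named after the class.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.Events;

// Hypothetical event asset: create instances from the Assets > Create menu.
[CreateAssetMenu(menuName = "Cues/Cue Event")]
public class CueEvent : ScriptableObject
{
    private readonly List<CueEventListener> listeners = new List<CueEventListener>();

    public void Raise()
    {
        // Iterate backwards so a listener can unregister itself while responding.
        for (int i = listeners.Count - 1; i >= 0; i--)
            listeners[i].OnEventRaised();
    }

    public void Register(CueEventListener listener)
    {
        if (!listeners.Contains(listener)) listeners.Add(listener);
    }

    public void Unregister(CueEventListener listener)
    {
        listeners.Remove(listener);
    }
}

// Hypothetical listener component: drag a CueEvent asset into the slot and
// wire up the response in the inspector, with no code changes needed.
public class CueEventListener : MonoBehaviour
{
    public CueEvent cueEvent;
    public UnityEvent response;

    private void OnEnable()
    {
        if (cueEvent != null) cueEvent.Register(this);
    }

    private void OnDisable()
    {
        if (cueEvent != null) cueEvent.Unregister(this);
    }

    public void OnEventRaised()
    {
        response.Invoke();
    }
}

// Hypothetical cue asset: one per cue, edited entirely in the inspector.
[CreateAssetMenu(menuName = "Cues/Cue")]
public class CueAsset : ScriptableObject
{
    public CueEvent[] automaticEvents; // raised when the cue starts
    public CueAsset nextCue;           // cue to enter once this one's trigger fires

    public void Enter()
    {
        if (automaticEvents == null) return;
        foreach (var cueEvent in automaticEvents)
            if (cueEvent != null) cueEvent.Raise();
    }
}
```

Because events and cues are assets rather than scene references, a collaborator can build or reorder a sequence by dragging assets between inspector slots.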

Because I designed this interface to match the “robot script,” that script served as an effective meeting point between the app and the original theater script, where the entire team could confer fluently about specific moments of player interaction and narrative flow throughout development.

Examples of the mobile interactions I programmed for the project: